Color Differences Between Cameras

Quite often, when a new camera comes to market, one heavily discussed question is whether the color it records is the same as, better than, or worse than that of a previous model. The color is often compared based on the rendering that some RAW converter provides. Thus an unknown variable comes into play: the color profiles or transforms that the RAW converter uses for these particular models. Yet another problem with such comparisons is that they are usually based on shots taken with different lenses, under different light, and with effectively different exposures in RAW (even when the exposure settings are the same).

Let's see how cameras compare in RAW if the set-up is kept very close to the same and the exposure is effectively equalized in RAW. It must be mentioned that light sources tend to age, so if the shots aren't taken very close in time to each other, chances are good that some of the differences between them can be attributed to the aging of the light sources.

DPReview keep a pretty good studio set-up, so let's take their studio scenes, made with 4 Canon cameras (Canon 5D Mark III, Canon 5D Mark IV, Canon 5DS, Canon 5DSr), and feed them to RawDigger (Profile Edition or Trial).

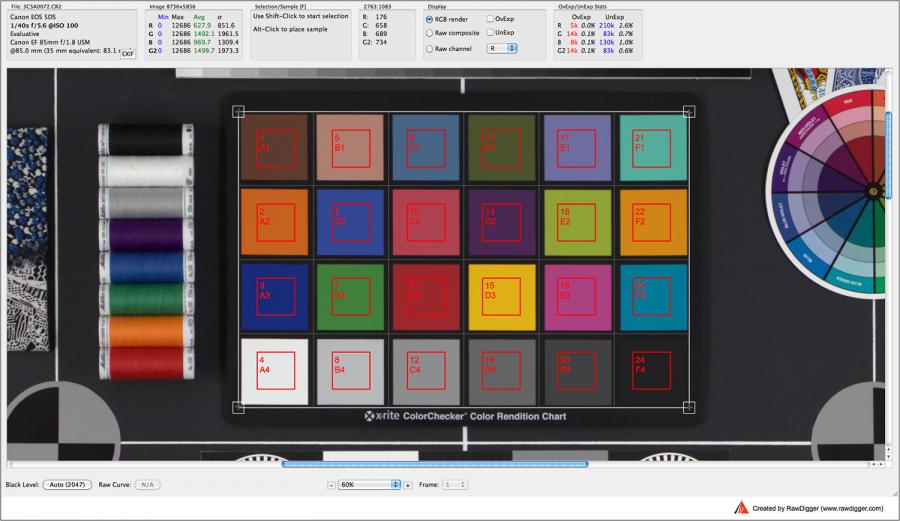

In RawDigger, we use a 4x6 grid to extract average RAW values from 24 patches of Color Checker...

RawDigger, grid placement.

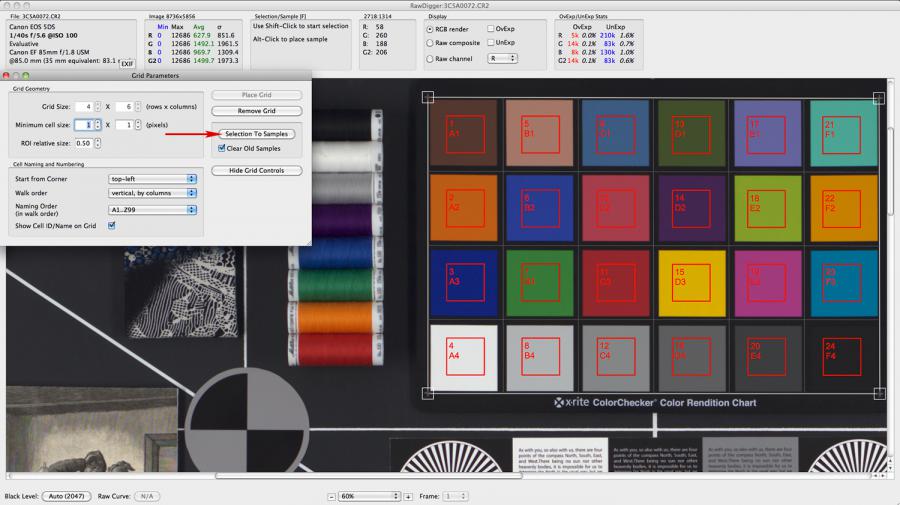

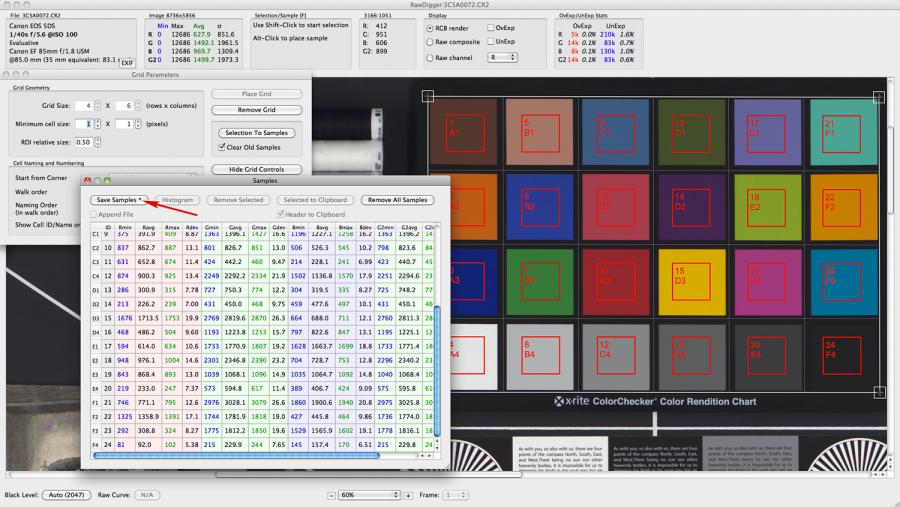

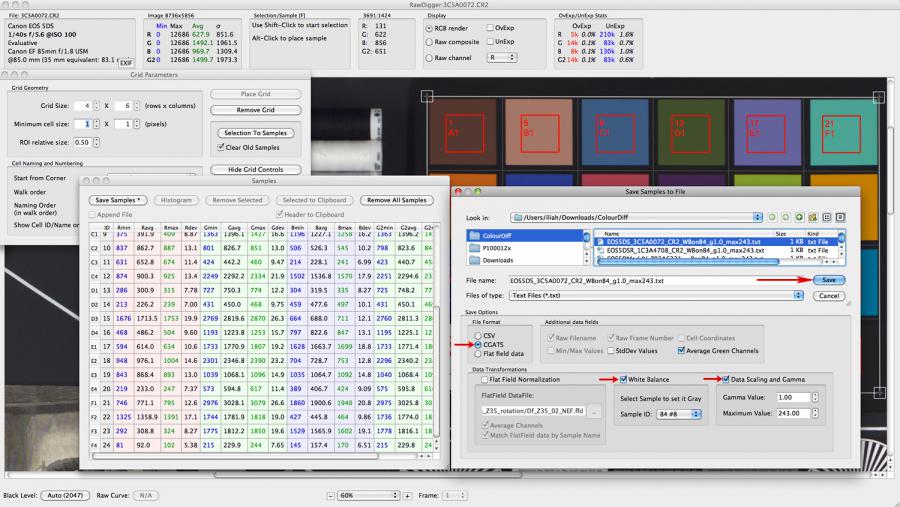

...and export text files (CGATS), normalizing the exposure (data scaling) by the whitest patch (A4) and applying white balance from the most neutral patch (B4) (see three pictures below).

RawDigger, converting Selection to Samples

RawDigger, Save Samples

RawDigger, writing Samples to File

It's important to mention that we don't apply any form of data manipulation here beyond black level subtraction, normalization, and white balancing. For detailed information on how to work with the grid in RawDigger please read this article.
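The three operations named above can be sketched in a few lines of Python. This is an illustration only, not RawDigger's actual arithmetic: we assume that white balancing means scaling each channel so that the neutral patch comes out with equal R, G, and B, and that data scaling means dividing by the green value of the white patch. The function name, the patch indices, and the sample numbers are all hypothetical.

```python
def normalize_patches(patches, white_idx, neutral_idx, black=(0.0, 0.0, 0.0)):
    """Black-subtract, white-balance, and scale a list of (R, G, B) patch averages.

    Assumptions (not taken from RawDigger): the white balance multipliers make
    the neutral patch equal in all channels, and the result is scaled so that
    the green channel of the white patch becomes 1.0.
    """
    # 1. Black level subtraction, per channel.
    data = [tuple(v - b for v, b in zip(p, black)) for p in patches]

    # 2. White balance: multipliers relative to green, from the neutral patch.
    nr, ng, nb = data[neutral_idx]
    wb = (ng / nr, 1.0, ng / nb)
    data = [tuple(v * k for v, k in zip(p, wb)) for p in data]

    # 3. Exposure normalization: scale by the green value of the white patch.
    scale = 1.0 / data[white_idx][1]
    return [tuple(v * scale for v in p) for p in data], wb


# Hypothetical raw averages for three patches: white, neutral gray, and a red.
raw = [(2148.0, 4548.0, 3048.0), (1048.0, 2248.0, 1548.0), (1248.0, 848.0, 548.0)]
normalized, wb = normalize_patches(
    raw, white_idx=0, neutral_idx=1, black=(48.0, 48.0, 48.0)
)
```

After this step the patch values from different cameras are on a common scale, so any remaining differences come from the sensors' spectral responses and not from exposure or white balance.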

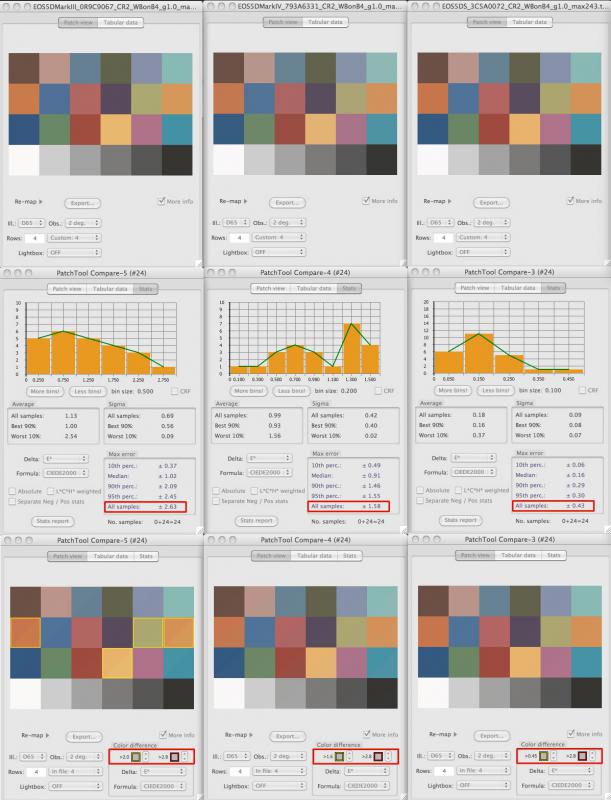

Opening the resulting files in BabelColor PatchTool, we can compare the results (we used the 5DSr as the reference and compared the other cameras to it). From the screenshots below, you can see that the difference is very small, nothing a decent color profile can't handle. Because the difference is small, we can use any reasonable conversion to Lab for the sake of computing deltaE; here we converted through Adobe RGB with gamma 1. Alternatively, you can build matrix transforms and compare them directly.

PatchTool. Comparing Canon 5D Mark III, Canon 5D Mark IV and Canon 5DS to Canon 5DSr: DeltaE, Histograms, Visualisation of the differences in color

It's generally considered that deltaE = 1 is a "just noticeable difference" (often abbreviated as JND), and in most cases deltaE = 2 is all we can hope for from a very accurate color transform. Between the 5D Mark III and the 5DSr we found a maximum difference of deltaE = 2.63, with only 4 patches exceeding deltaE = 2, and the shots were taken close to 2 years apart. Any way you look at it, that can't seriously be considered a "different color".
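For readers who want to reproduce this kind of comparison without PatchTool, here is a minimal sketch. It treats the normalized RAW triplets as linear (gamma 1) Adobe RGB, converts them to Lab, and reports the largest deltaE and the number of patches above a threshold. Several things here are assumptions: the sketch uses the simple CIE76 formula (Euclidean distance in Lab), not CIEDE2000; it takes the Adobe RGB white point (D65) as the Lab reference white with no chromatic adaptation; and all function names are hypothetical. The numbers it produces will therefore not match the screenshots exactly.

```python
import math

# Linear Adobe RGB (1998) to CIE XYZ matrix, D65 white point.
ADOBE_RGB_TO_XYZ = (
    (0.5767309, 0.1855540, 0.1881852),
    (0.2973769, 0.6273491, 0.0752741),
    (0.0270343, 0.0706872, 0.9911085),
)


def rgb_to_xyz(rgb):
    """Convert a linear (gamma 1) RGB triplet to XYZ."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in ADOBE_RGB_TO_XYZ)


# Assumption: RGB (1, 1, 1) is used as the Lab reference white.
WHITE = rgb_to_xyz((1.0, 1.0, 1.0))


def xyz_to_lab(xyz):
    """Standard CIE XYZ to L*a*b* conversion relative to WHITE."""
    def f(t):
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = (f(v / w) for v, w in zip(xyz, WHITE))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def delta_e76(rgb1, rgb2):
    """CIE76 color difference between two linear RGB triplets."""
    lab1 = xyz_to_lab(rgb_to_xyz(rgb1))
    lab2 = xyz_to_lab(rgb_to_xyz(rgb2))
    return math.dist(lab1, lab2)


def summarize(reference, sample, threshold=2.0):
    """Return (largest deltaE, number of patches above threshold)."""
    diffs = [delta_e76(a, b) for a, b in zip(reference, sample)]
    return max(diffs), sum(d > threshold for d in diffs)
```

Calling `summarize(reference_patches, other_patches)` on two lists of normalized patch triplets gives the two figures quoted in the text: the maximum difference and the count of patches exceeding deltaE = 2.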

The light sources DPReview use for this scene are rated at 5500K, and one of the regular Canon White Balance presets in CR2 files, 5600K, is pretty close to this. Let's have a look at the white balance coefficients for this preset in FastRawViewer (white balance coefficients, outlined in red, follow RGB sequence).

FastRawViewer. Canon 5DSr. White Balance coefficients for CCT=5600K: (Kr, Kg, Kb) = (2.36, 1.00, 1.64)

FastRawViewer. Canon 5D Mark III. White Balance coefficients for CCT=5600K: (Kr, Kg, Kb) = (2.03, 1.00, 1.38)

FastRawViewer. Canon 5D Mark IV. White Balance coefficients for CCT=5600K: (Kr, Kg, Kb) = (2.09, 1.00, 1.55)

FastRawViewer. Canon 5DS. White Balance coefficients for CCT=5600K: (Kr, Kg, Kb) = (2.28, 1.00, 1.64)

The values for the white balance preset are, mostly, pretty different between the cameras we looked at. This difference may be caused by several factors, including the filter properties, microlens collection abilities, gain controls, and even changes and improvements to the responsivity to certain ranges of spectrum of the photodiodes themselves. But, as we saw, after normalization and white balancing, the difference in color is not anything to fret about.
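To put numbers on how far apart these presets are, one option is to express each coefficient relative to the 5DSr in stops (log base 2 of the ratio). The coefficients below are the ones listed above; expressing the gap in stops and the helper name `wb_offset_in_stops` are our own choices for illustration.

```python
import math

# (Kr, Kg, Kb) for the 5600K preset, as read from FastRawViewer above.
WB_5600K = {
    "Canon 5DSr": (2.36, 1.00, 1.64),
    "Canon 5D Mark III": (2.03, 1.00, 1.38),
    "Canon 5D Mark IV": (2.09, 1.00, 1.55),
    "Canon 5DS": (2.28, 1.00, 1.64),
}


def wb_offset_in_stops(coeffs, reference):
    """Per-channel difference between two sets of WB coefficients, in stops."""
    return tuple(math.log2(c / r) for c, r in zip(coeffs, reference))


reference = WB_5600K["Canon 5DSr"]
for camera, coeffs in WB_5600K.items():
    stops = wb_offset_in_stops(coeffs, reference)
    print(camera, " ".join(f"{s:+.2f}" for s in stops))
```

A negative value means the camera needs less amplification in that channel than the 5DSr to render the same neutral under this preset.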

Comments

N/A (not verified)

Tue, 10/18/2016 - 09:36

> Opening the resulting files in BabelColor PatchTool

opening RGB DNs in CGATS as coordinates in ProPhoto color space, right ?

Iliah

Tue, 10/18/2016 - 10:35

ProPhoto will over-saturate the targets; what was used for the above is AdobeRGB with gamma = 1. But checking with ProPhoto, the largest difference is still less than 3 dE00.

Anonymous (not verified)

Tue, 10/18/2016 - 11:33

> ProPhoto will over-saturate the targets

same RGB DNs used as coordinates with the wider gamut colorspace = more saturated color with narrower gamut = less saturated color... I see ... but then it is a trick where you in fact manipulate the results by using some color space at will, no ? is there any possible metric that can be used on DNs w/o color space assignment ? something like sqrt ( ( R1 - R2 )^2 + (G1-G2)^2 + (B1-B2)^2 )

Iliah

Tue, 10/18/2016 - 11:39

Yes, there is such an RGB-based metric. Problem is, it has no colorimetric or perceptual interpretation. With differences as small as we have here, I do not see how the choice of using AdobeRGB transform is limiting.

Anonymous (not verified)

Tue, 10/18/2016 - 11:57

> Problem is, it has no colorimetric or perceptual interpretation.

but do we need such interpretation at all ? if one is trying to exclude the color transform influence ("camera profiles") from comparison then one actually does not want that interpretation at all and can assume that the data before color transform shall be sufficiently close in that RGB DNs "space" for cameras that are not designed to have different CFAs (or different CFA but resulting in somewhat same total light under a specific illumination transmitted to "R", "G1/G2", "B" sensels in the same spot for different cameras - but then in this case under a different spectrum we shall see more different results - which btw means that your example might gain from comparison under 2 different spectrums... pity that test sites don't publish same raws same scene shot under some tungsten for example, no ?)

Iliah

Tue, 10/18/2016 - 12:44

Yes, we do need this interpretation, because it allows us to weight the differences and ignore minor differences in data which do not result in significantly different colour. You are welcome to compare necessary colour transforms, too.

Ben Goren (not verified)

Mon, 12/12/2016 - 11:20

Matches what I've seen

For what it's worth, when building spectral models of Canon camera sensors, I've been struck by the at least superficial similarity between them. I haven't done any objective comparisons between models, but I'd bet a cup of coffee that there's about as much variation from one serial number of the same model to another as there is between different models -- and any such variation is going to be overwhelmed by the differences between successive firings of the same flash.

Photography perfectly exemplifies that old adage about measuring with a micrometer, marking with chalk, and cutting with an axe. People get all excited about a new sensor with 13 stops of dynamic range which is so much better than the old-and-busted model that only had 11.5 stops...and are completely oblivious to the four stops of veiling glare and mirror box reflections from the Sun hitting the front element of the lens. (Indeed, is there _any_ modern real-world situation in which a camera's sensor is what limits a photographic capture's dynamic range?) They ooh and aah and gush over the magical color of this-or-that lens...and then put a "protective" filter over it that alters the spectral output more than the difference caused by the lens they don't like -- not to mention the color cast from that billboard looming behind them as they shoot.

Even in a studio setting...many studios are so poorly set up that a photographer's choice of clothing is going to have more of an impact on the final color rendition than any gear selection choices available to mere mortals.

On top of it all...within certain limits that basically nobody ever comes close to approaching, _all_ color variations can be normalized with a sufficient ICC-based RAW workflow. I've made studio pictures using a compact fluorescent blacklight such that everything looks exactly as if shot under D50 save for things that actually fluoresce. (When I pressed the shutter, the scene I saw before me looked like a cheesy teenager's party.) As in, a ColorChecker Passport looks exactly as it should, save that the laser-cutout logo and similar text is much brighter and bluer than otherwise because those bits are heavily laced with fluorescent whiteners.

My color advice in general is that most photographers should focus on the painterly aesthetics of the image once it's in the camera. Only those doing art reproduction or certain types of product photography should worry about color accuracy, and they need to be prepared to chase the rabbit _very_ far down the hole. But, for most people, the very-much-NOT-colorimetric tools everybody uses are pretty well suited to artistic expression as typically practiced.

Cheers,

b&

LibRaw

Mon, 12/12/2016 - 11:36

Pretty much same experience here, Ben. Difference in electronic designs and sample variation are more important. CFA recipes are what really affect colour, and the number of those recipes in use is very limited.

--

Best,

Iliah

Emmett (not verified)

Sun, 03/01/2020 - 11:56

colour space data

Is there any chance to have a look at a few sensor colour space data vs reference data in CSV format?

LibRaw

Sun, 03/01/2020 - 12:19

Dear Sir:

If you have the reference data, it should be an easy thing to do in any spreadsheet application.
